
Tongyuan Bai

Ph.D. Student

ICL Group, School of Artificial Intelligence

Jilin University

I am a Ph.D. student working at the intersection of artificial intelligence and computer graphics. My current work focuses on generating 3D spatial layouts for indoor and outdoor scenes using diffusion models and large language models. My research vision is to automate the creation of diverse 3D scenes, particularly those that exist solely in the human imagination, and so contribute to richly immersive and limitless virtual worlds.

📧baity23@mails.jlu.edu.cn

📍Changchun, China

About Me

My background and expertise

Research Interests

Education

Bachelor

Automation

Tianjin University

Graduated in 2018

Master

Control Engineering

Dalian University of Technology

2020 - 2023

Ph.D. Candidate

Artificial Intelligence

Jilin University

2023 - Present

Work Experience

FPGA Radar Engineer

Worked on FPGA-based radar systems development and signal processing.

Leijiu Technology Co., Ltd.

2018 - 2019

Backend Engineer (Intern)

Worked on backend development for the Overseas Recommendation System team.

ByteDance-Data-AML

2022.6 - 2022.9

Publications

My research contributions and academic publications

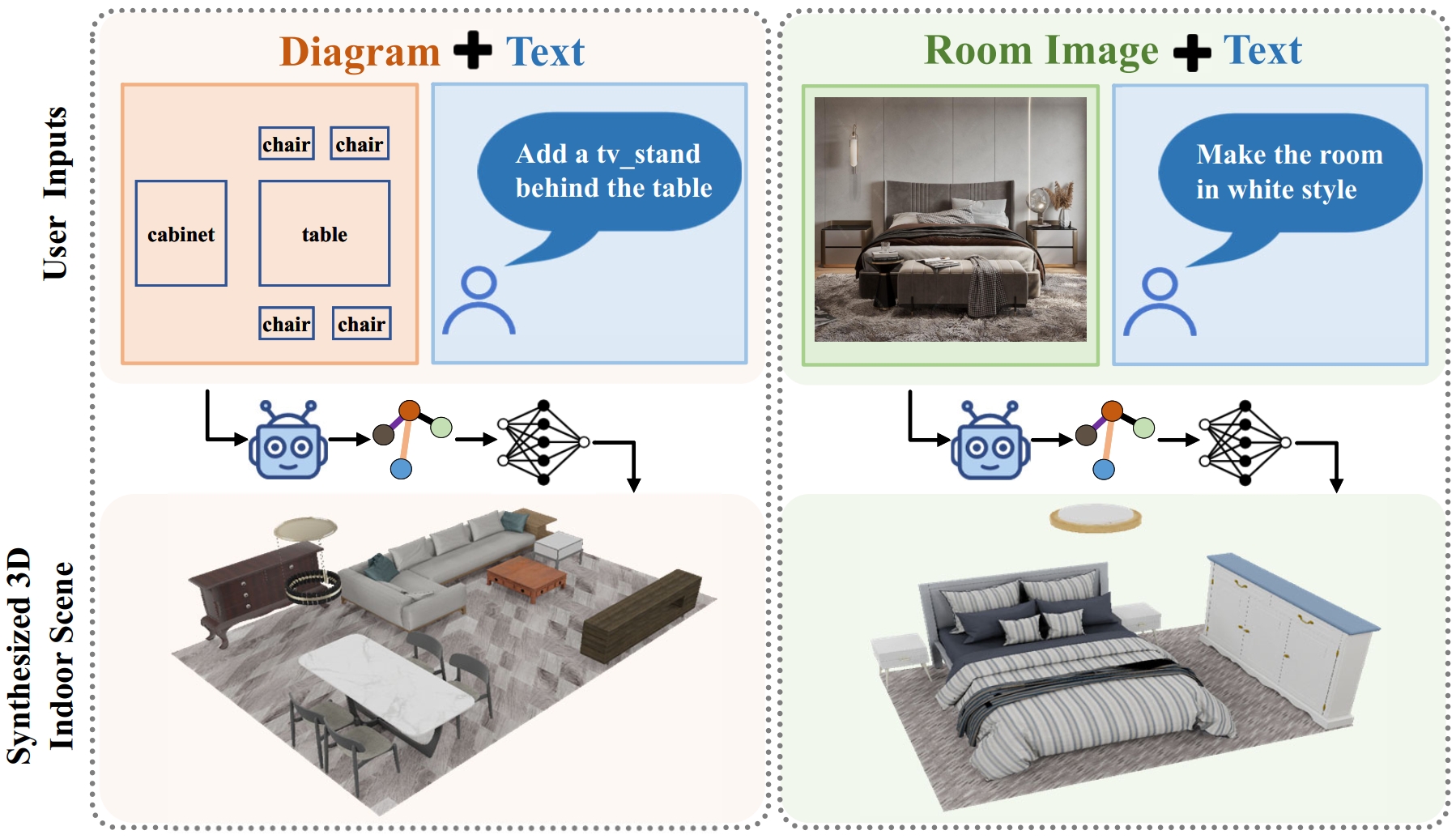

FreeScene: Mixed Graph Diffusion for 3D Scene Synthesis from Free Prompts

CVPR 2025

CCF-A | Conference | Accepted

Tongyuan Bai, Wangyuanfan Bai, Dong Chen, Tieru Wu, Manyi Li, Rui Ma

Controllability plays a crucial role in the practical applications of 3D indoor scene synthesis. Existing works either allow rough language-based control, which is convenient but lacks fine-grained scene customization, or employ graph-based control, which offers better controllability but demands considerable knowledge for the cumbersome graph design process. To address these challenges, we present FreeScene, a user-friendly framework that enables both convenient and effective control for indoor scene synthesis. Specifically, FreeScene supports free-form user inputs, including text descriptions and/or reference images, allowing users to express versatile design intentions. The user inputs are analyzed and integrated into a graph representation by a VLM-based Graph Designer. We then propose MG-DiT, a Mixed Graph Diffusion Transformer, which performs graph-aware denoising to enhance scene generation. MG-DiT not only excels at preserving graph structure but also applies to a range of tasks, including, but not limited to, text-to-scene, graph-to-scene, and rearrangement, all within a single model. Extensive experiments demonstrate that FreeScene provides an efficient and user-friendly solution that unifies text-based and graph-based scene synthesis, outperforming state-of-the-art methods in both generation quality and controllability across a range of applications.

SigStyle: Signature Style Transfer via Personalized Text-to-Image Models

AAAI 2025

CCF-A | Conference | Accepted

Ye Wang, Tongyuan Bai, Xuping Xie, Zili Yi, Yilin Wang, Rui Ma

Style transfer integrates the artistic style of a style image into a content image, producing visually striking and aesthetically enriched outputs. Despite numerous advances in this field, existing methods have not explicitly focused on the signature style, which represents the distinct and recognizable visual traits of an image, such as geometric and structural patterns, color palettes, and brush strokes. In this paper, we introduce SigStyle, a framework that leverages the semantic priors embedded in a personalized text-to-image diffusion model to capture the signature style representation. This style capture process is powered by a hypernetwork that efficiently fine-tunes the diffusion model for any given single style image. Style transfer is then formulated as reconstructing the content image through the learned style tokens of the personalized diffusion model. Additionally, to ensure content consistency throughout the style transfer process, we introduce a time-aware attention swapping technique that injects content information from the original image into the early denoising steps of target image generation. Beyond enabling high-quality signature style transfer across a wide range of styles, SigStyle supports several further applications, such as local style transfer, texture transfer, style fusion, and style-guided text-to-image generation. Quantitative and qualitative evaluations demonstrate that our approach outperforms existing style transfer methods in recognizing and transferring signature styles.

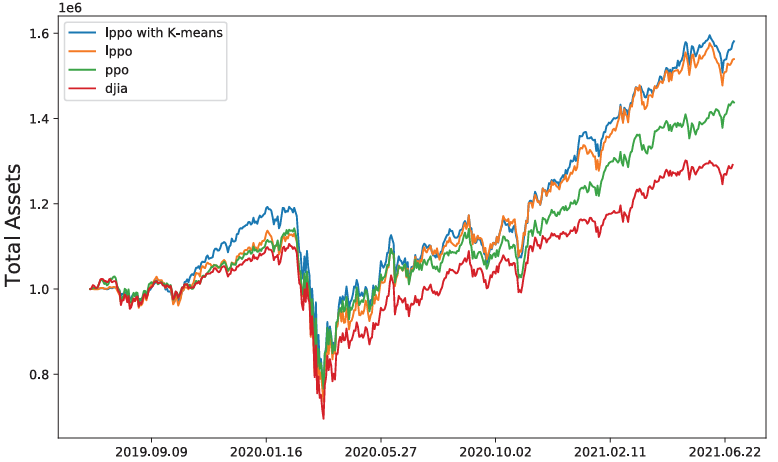

Feature Fusion Deep Reinforcement Learning Approach for Stock Trading

CCC 2022

EI | Conference | Accepted

Tongyuan Bai, Qi Lang, Shifan Song, Yan Fang, Xiaodong Liu

This paper presents a feature fusion deep reinforcement learning approach for stock trading. The proposed method combines multiple feature extraction techniques with deep reinforcement learning algorithms to improve trading decisions in financial markets. By integrating various market indicators and technical features, the approach aims to capture complex market dynamics and improve the performance of automated trading strategies.

News

Latest academic updates and research progress

Our paper accepted to CVPR 2025

Our paper 'FreeScene: Mixed Graph Diffusion for 3D Scene Synthesis from Free Prompts' has been accepted to CVPR 2025!

Our paper accepted to AAAI 2025

Our paper 'SigStyle: Signature Style Transfer via Personalized Text-to-Image Models' has been accepted to AAAI 2025!
